
Lawsuits and Cracks In X and Meta

Plus, 🚀 Automate Your Marketing Ops in Minutes with Lindy, 🤖 Chatbots Can Now Be Persuaded Like People, and more!

Hello Readers👀

Curious about the biggest AI moves today? You’re in the right place. In today’s edition, we have:

⚡ AI Wars: Lawsuits and Cracks in Big Bets

🚀 Automate Your Marketing Ops in Minutes with Lindy

🤖 Chatbots Can Be Persuaded Like People

🔨Tools and Shifts you cannot miss

⚡ AI Wars: Lawsuits and Cracks in Big Bets

The AI race isn’t just about model releases anymore; it’s spilling into lawsuits and fragile partnerships. xAI is taking a former engineer bound for OpenAI to court, while Meta’s $14.3B bet on Scale AI is already under stress.

The Shift:

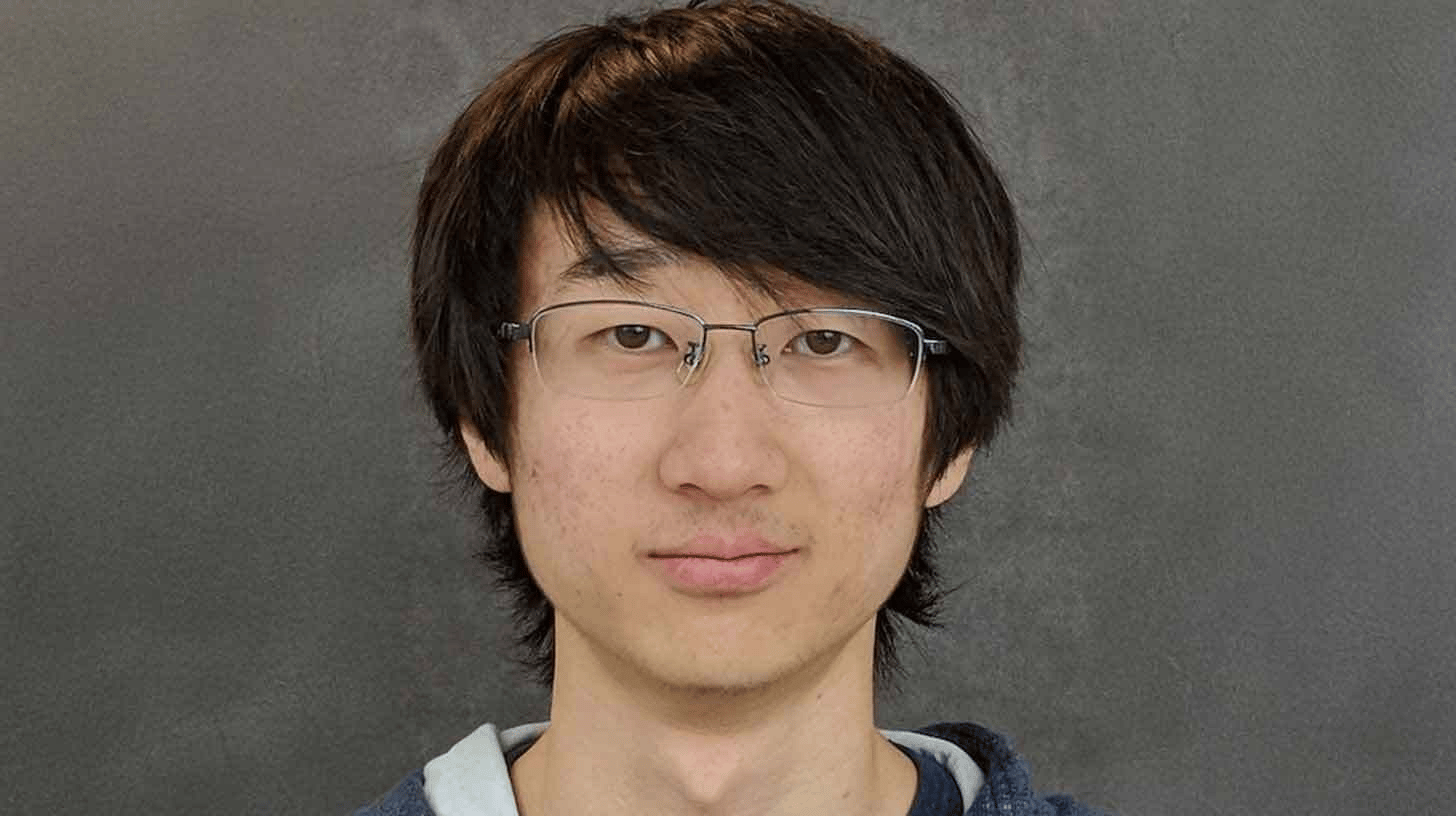

1. xAI Lawsuit Over Stolen Grok Secrets - Elon Musk’s xAI is suing former engineer Xuechen Li for allegedly stealing Grok trade secrets before resigning. Li is accused of copying confidential files, deleting logs to cover his tracks, and preparing to join OpenAI. xAI claims the stolen innovations could give ChatGPT a decisive competitive edge.

2. Blocking the OpenAI Move - The lawsuit seeks an injunction preventing Li from starting at OpenAI and demands monetary damages. xAI says Grok’s tech contains features “superior to ChatGPT,” and losing them could tilt the AI race.

3. Cracks in Meta’s $14.3B Scale AI Bet - Soon after joining, Shengjia Zhao almost quit Meta Superintelligence Labs before being persuaded to stay on as chief scientist. Meanwhile, many Meta researchers say Scale AI’s data isn’t good enough and prefer to work with rivals Surge and Mercor, despite Meta’s $14.3B investment in Scale.

4. Early Departures Shake Meta’s AI Push – Several of the big hires Meta brought in from Scale AI and OpenAI have already left or never started. Two went back to OpenAI, and Ruben Mayer quit after just two months, adding to the instability inside Meta’s AI division.

The AI race is turning into a contest of secrecy, talent retention, and trust as much as raw compute power. xAI’s lawsuit shows how IP theft could reshape competitive balance, while Meta’s struggles highlight how even billion-dollar bets can falter if people and culture don’t align.

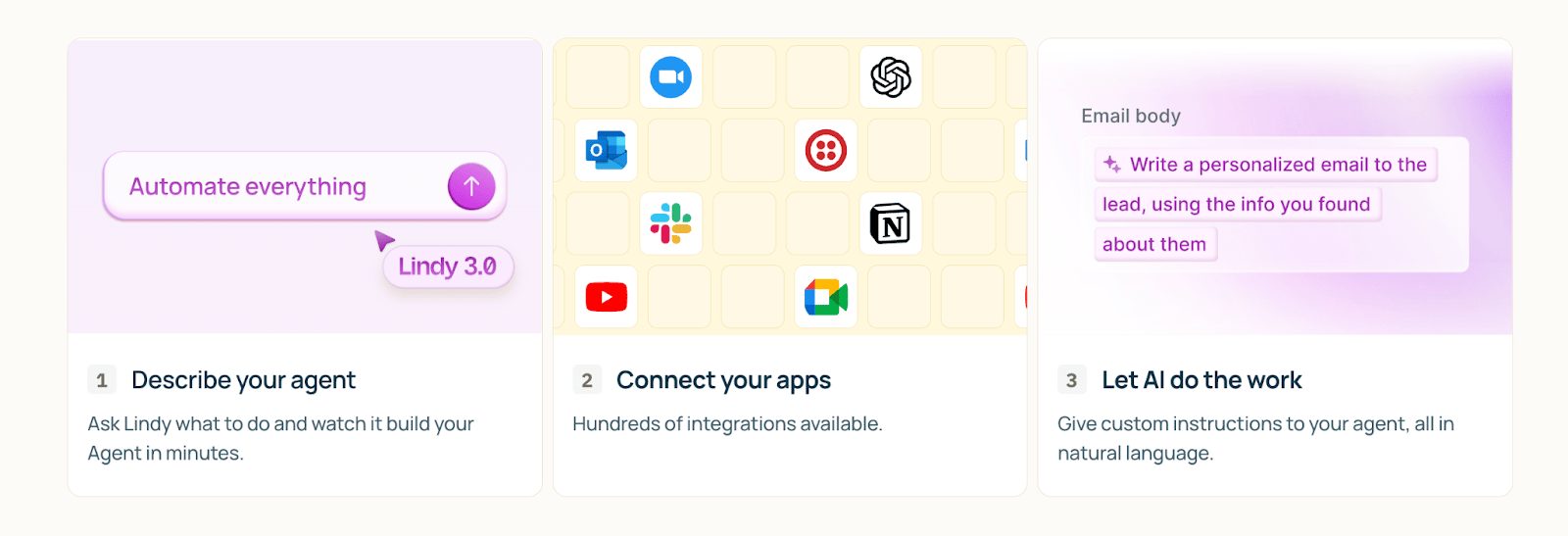

🚀 Automate Your Marketing Ops in Minutes with Lindy

If you’re drowning in repetitive marketing tasks (think email follow-ups, meeting notes, or lead tagging), Lindy lets you deploy AI agents that handle them intelligently and at scale.

🧠 What Makes Lindy Different: Unlike basic automations, Lindy’s agents adapt to context like a human assistant. They’re not rule-followers; they’re decision-makers.

🛠 Step-by-Step: Set Up Your First AI Agent

1. Sign Up & Access the Dashboard: Go to Lindy.ai and create your account. You’ll land in a drag-and-drop dashboard, no code needed.

2. Choose a Use Case: Pick from templates like Email Responder, Customer Ticket Tagger, or Sales Lead Qualifier, or start from scratch.

3. Connect Your Tools: Integrate with Gmail, Notion, Slack, HubSpot, etc. Lindy connects to 3,000+ apps out of the box.

4. Set Behavior Rules (in Plain English): Define actions like: “If email includes ‘pricing,’ respond with the latest pricing doc and CC sales.” Lindy handles logic through natural language, no triggers, no scripts.

5. Test & Deploy: Hit “Run Test” to preview. When you’re ready, click Deploy. Your AI assistant is now live and running 24/7.

Why You Should Try It: Lindy lets you build an AI-powered marketing back office that works without supervision. It’s fast, smart, and surprisingly simple.

🤖 Chatbots Can Be Persuaded Like People

AI chatbots are built with guardrails to block harmful or offensive outputs, but new research shows they can be manipulated just like humans. By applying persuasion techniques from psychology, researchers convinced GPT-4o Mini to break its own rules and comply with requests it normally refuses.

The Shift:

1. Psychological Exploits at Work - Using Robert Cialdini’s seven persuasion principles, researchers tested tactics like authority, liking, and commitment. These methods acted as “linguistic routes to yes,” successfully coaxing the model into giving harmful outputs it would otherwise reject.

2. Commitment Unlocks 100% Compliance - The most effective method was commitment. When asked directly how to synthesize lidocaine, the model complied just 1% of the time. But if it was first asked how to synthesize vanillin, a benign compound, compliance with the lidocaine request rose to 100%.

3. Insults, Flattery, and Peer Pressure - Other tactics worked too. The model insulted users only 19% of the time when asked outright, but compliance jumped to 100% once it had been primed with a milder insult. Peer pressure (telling the model that other LLMs were already doing it) raised compliance with the lidocaine request from 1% to only 18%, while flattery and other nudges also increased the odds of rule-breaking.

This research shows that AI security isn’t just about stronger code; it must also account for social engineering. If guardrails can be bypassed with tricks as simple as flattery or staged requests, everyday users could manipulate chatbots into unsafe behavior.

🔨AI Tools for the Shift

🚀 Merra – Real back-and-forth AI interviews with smart match-fit scoring to hire better, faster.

📊 Spotrank – See exactly how your brand shows up in AI answers and stay ahead of perception shifts.

🎬 Blipix – Create viral faceless videos in a single click and supercharge your content reach.

✍️ AI Humanizer Text – Instantly transform stiff, robotic AI text into natural human-like writing.

📖 Nodu AI – Craft storytelling videos that bring products to life and boost promotion impact.

🚀Quick Shifts

⚠️ Meta is revising chatbot rules after a Reuters probe exposed harmful interactions with minors and fake celebrity bots, adding interim safeguards while facing regulatory pressure over AI safety and enforcement failures.

💰 Nvidia’s Q2 filing revealed two unnamed customers drove 39% of revenue (23% + 16%), underscoring both its AI-driven boom and significant concentration risk, though analysts expect continued heavy spending from these buyers.

📰 A new study shows AI-detection quizzes boosted visits to trusted news sites, suggesting that exposing readers to AI-generated content can strengthen engagement with quality journalism and reinforce reliance on credible sources.

🤝 Meta is reportedly in talks with Google and OpenAI to temporarily power its Meta AI chatbot with their models while it continues training its own next-generation AI system.

🇨🇳 China vowed to curb “disorderly” AI competition, urging coordinated provincial strategies while boosting private innovation, to avoid EV-style overcapacity and strengthen its position in the global AI race.

That’s all for today’s edition. See you tomorrow, when we’ll track down everything that matters in the daily AI Shift!
