Your Browser Just Got a Brain

Plus: 🚨 Clone Any Ad. Swap the Face. Keep the Style; China’s Open-Source AI Just Leveled Up: Meet GLM-4.5 and Wan 2.2; and more!

Hello there! Ready to dive into another mind-boggling, value-filled Shift to upgrade your day?

Today we have:

🧠 The Browser Is No Longer Passive, Copilot Mode Turns Edge Into an AI Assistant

🚨 Clone Any Ad. Swap the Face. Keep the Style.

🧠 China’s Open-Source AI Just Leveled Up, Meet GLM-4.5 and Wan 2.2

🔨 Tools and Shifts you cannot miss

🧠 The Browser Is No Longer Passive, Copilot Mode Turns Edge Into an AI Assistant

Microsoft just launched Copilot Mode in Edge, blending search, chat, and action into a single AI-driven interface. It analyzes your open tabs, handles tasks, and helps you move through the web like a co-pilot, not just a browser.

The Shift

1. Your browser now sees the full picture - Copilot Mode reads across all your open tabs to understand what you're researching or comparing. It can help you make decisions faster, like comparing Airbnb listings or translating content without ever leaving the page. The experience is streamlined, with a chat-first tab view and personalized suggestions based on what you're doing.

2. Speak to search, act on intent - Edge now supports natural voice commands, letting you ask Copilot to open tabs, locate info, or even prep a side-by-side comparison. Soon, it may handle bookings, errands, and more, using your browser history and credentials if you grant it access.

3. Copilot helps you stay in flow, not in tabs - Dynamic panels let you use Copilot without losing your place on the page, cutting through distractions like pop-ups or long blog posts. It will soon offer “journeys” that organize your past browsing by task or topic, helping you pick up where you left off.

With Copilot Mode, Microsoft Edge is making a strong bid to lead the agentic browsing race, building an AI assistant that Microsoft describes as privacy-first directly into the web experience. Copilot Mode marks a shift from passive browsing to active collaboration, where the browser helps users search, compare, and act with less friction.

🚨 Clone Any Ad. Swap the Face. Keep the Style.

With Higgsfield Steal (Chrome Extension), you can recreate any ad from Instagram or Pinterest, and swap the model with yourself using Soul ID.

Here’s how to do it step-by-step:

1. Install the Chrome Extension: Download from here

2. Browse Instagram or Pinterest: Hover over any image (ad, photoshoot, product demo). Click the new “Recreate” button that appears.

3. Swap in Your Character (Soul ID): Choose your own trained identity, or use a default one. It replaces the actor/model while keeping the aesthetic, product, and vibe fully intact.

4. Get 4 Stylized Outputs Instantly: Higgsfield generates 4 high-quality clones in seconds, all with your character in the scene.

5. Animate & Add Voice (Optional): Take any image and make it talk with text-to-speech or lip-sync. Perfect for social ads, lookbooks, or viral videos.

⚠️ Use responsibly, or you’ll outcompete creators still doing manual shoots.

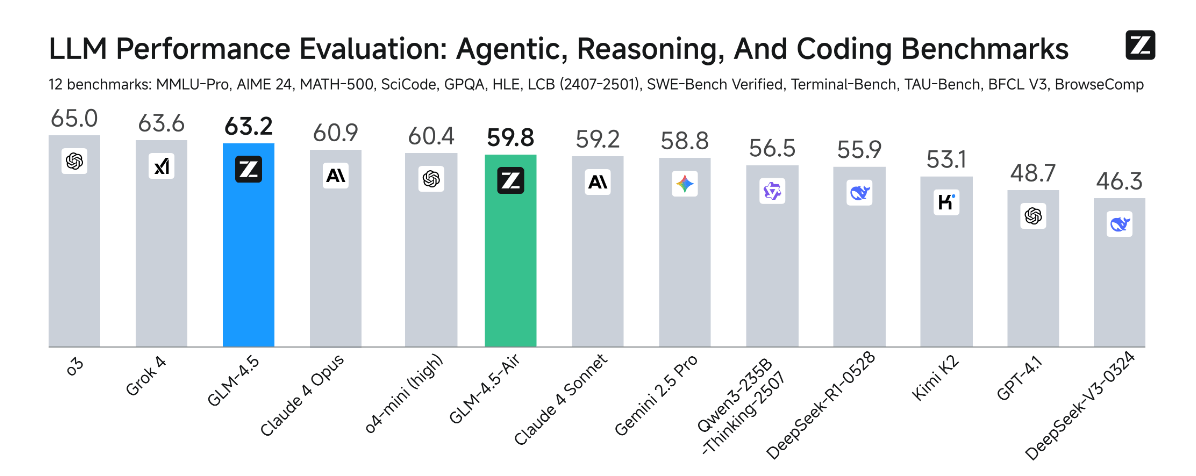

🧠 China’s Open-Source AI Just Leveled Up, Meet GLM-4.5 and Wan 2.2

Z.ai’s GLM-4.5 and Alibaba’s Wan 2.2 are pushing open-source AI into frontier territory. From coding agents to cinematic video gen, they now rival top closed models. And they’re fully open, fast to deploy, and ready for real use.

The Shift:

1. GLM-4.5 merges logic, coding, and tool use - According to Z.ai's reported benchmarks, it ranks in the top 3 globally, with a 90.6% tool-call success rate and full-stack dev ability. The model supports multi-step reasoning and builds apps, games, and slides through natural prompts. Z.ai also released slime, its open RL framework for long-horizon agent training.

2. It wins real-world coding tasks, not just benchmarks - GLM-4.5 beats Qwen3-Coder in 80% of tasks and rivals Claude Sonnet in coding workflows. It handles full web apps, live debugging, and autonomous project builds. It's fully open-source, with weights on Hugging Face, API access, and Claude Code integration.

3. Wan 2.2 AI brings open video gen with pro control - The model outputs native 1080p, adds cinematic camera moves, and runs on a dual-expert system. It was trained on 66% more images and 83% more videos than Wan 2.1. Benchmarks show it outperforms Seedance, Kling, Hailuo, and Sora in realism and motion control.

4. Built for creators, studios, and global teams - Wan 2.2 supports bilingual prompts, fast LoRA training, and deploys instantly via VideoWeb AI. It’s open-source under Apache 2.0 and optimized for commercial work.

Open models are no longer trailing; they’re leading in real-world performance and usability. GLM-4.5 and Wan 2.2 show what’s possible when speed, transparency, and capability align. The next AI wave won’t be decided by closed versus open; it’ll be decided by which models get used first.

🔨 AI Tools for the Shift

🎬 Keytake – AI Video Editor – Professional videos, one prompt away. Create polished edits using AI with zero manual effort.

🌍 AddSubtitle – Translate video subtitles into 100+ languages instantly. Perfect for creators, educators, and global teams.

📽️ AI PPT Maker – Turn your ideas into PowerPoint slides instantly with AI. Great for fast decks, pitch presentations, and classrooms.

🧍‍♂️ Background People Remover – Remove people from photos in seconds. Powered by Magic Eraser’s smart AI cleanup.

🗣️ Talk To Locals – Turn your phone into a two-way voice translator for live multilingual conversations.

🚀 Quick Shifts

🕶️ Alibaba will launch Quark AI Glasses in China by end-2025, featuring hands-free calls, translation, payments, and more, directly challenging Meta and Xiaomi in the race for AI-powered wearables.

🛍️ Chrome now lets you view AI-generated store reviews right from the URL bar, summarizing key details like service, quality, shipping, and returns to help you shop smarter at a glance.

🧠 Anthropic will introduce weekly rate limits for Claude Code starting August 28 to curb misuse by power users, account sharers, and resellers. The company says fewer than 5% of subscribers will be affected.

🚗 Tesla has inked a $16.5B deal with Samsung to produce its next-gen AI6 chips, which will power everything from FSD to Optimus robots and AI data centers.

That’s all for today’s edition. See you tomorrow, when we’ll track down everything that matters in the daily AI Shift!
